Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

SSD Pools - Use cases?

- Thread starter dgrab

> SSDs have much higher IOPS than HDDs.
>
> SSDs in RAIDZ'n' still have higher IOPS than mirrored HDDs and have better space efficiency than mirrors whilst maintaining parity.
>
> So if you need more IOPS than HDDs in any form, SSDs are a valid use case.

What kind of TrueNAS operations benefit from high IOPS?

I notice you have all kinds of SSDs, both in your main storage pool, SSD pool, AppPool and a Scratch SSD. Are you able to further elaborate on how they're used beyond SLOG and L2ARC? Do you use just one Optane 900P as a slog device? Doesn't the TrueNAS documentation recommend using a mirror for SLOG?

Do L2ARC drives have to be MLC like the documentation seems to imply? Would the usual Samsung 870 EVO or Crucial MX500 not suffice?

My TrueNAS installation has an HBA card capable of connecting up to 8 SATA drives. Four of them will be 12TB Seagate IronWolfs (I haven't decided between striped mirrors or raidz1 for the config) but I still have four more SATA ports from the second SAS port to use. I think connecting them to SSDs would make more sense than more HDDs because I really don't need more large storage drives, but I'm just looking for ideas on what to set up. There is a pretty good deal for 1TB Crucial MX500 SSDs on Amazon in my country, but from what I read in the TrueNAS documentation, consumer SATA SSDs don't seem like the best choice for ZFS's unique caching features.

For what it's worth, my main TrueNAS use case is storing large 3D projects and assets, and accessing them over the network. Also continually archiving data for family and friends. My TrueNAS is virtualized, and its hypervisor (Unraid) is what handles media server duties and other more recreational functions.
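To put numbers on the striped-mirrors versus raidz1 choice for the four 12TB drives, here is a minimal sketch of the raw capacity arithmetic. The function name and layout labels are made up for illustration, and the figures ignore ZFS overhead (metadata, padding, reserved space), so real usable space will be somewhat lower.

```python
def usable_tb(layout: str, drives: int, size_tb: float) -> float:
    """Raw usable capacity in TB, before any ZFS overhead.

    layout is a hypothetical label: "raidz1" or "striped_mirrors".
    """
    if layout == "raidz1":
        if drives < 2:
            raise ValueError("raidz1 needs at least 2 drives")
        # One drive's worth of space goes to parity.
        return (drives - 1) * size_tb
    if layout == "striped_mirrors":
        if drives < 2 or drives % 2:
            raise ValueError("2-way mirrors need an even number of drives")
        # Every pair of drives stores one drive's worth of data.
        return (drives // 2) * size_tb
    raise ValueError(f"unknown layout: {layout}")


for layout in ("raidz1", "striped_mirrors"):
    print(f"{layout}: {usable_tb(layout, 4, 12.0):.0f} TB raw usable")
```

Capacity is only half of the trade-off. A single raidz1 vdev survives any one drive failure, while two striped mirrors survive one failure per mirror and give the pool two vdevs to spread random I/O over.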

- Joined

- Jan 1, 2016

- Messages

- 9,700

> My TrueNAS is virtualized, and its hypervisor (Unraid) is what handles media server duties and other more recreational functions.

It would be important to consider how you are providing disks to the TrueNAS guest OS... Are you passing through the entire HBA?

Unless that's the case, you are likely set up to lose any data you have stored on TrueNAS at some point.

> It would be important to consider how you are providing disks to the TrueNAS guest OS... Are you passing through the entire HBA?

Of course.

- Joined

- Nov 25, 2013

- Messages

- 7,776

- Joined

- Apr 16, 2020

- Messages

- 2,947

> What kind of TrueNAS operations benefit from high IOPS?
>
> I notice you have all kinds of SSDs, both in your main storage pool, SSD pool, AppPool and a Scratch SSD. Are you able to further elaborate on how they're used beyond SLOG and L2ARC? Do you use just one Optane 900P as a slog device? Doesn't the TrueNAS documentation recommend using a mirror for SLOG?
>
> Do L2ARC drives have to be MLC like the documentation seems to imply? Would the usual Samsung 870 EVO or Crucial MX500 not suffice?

AppPool - 2 SSDs. Main use for this is ix-applications and docker config files. Keeps the docker stuff snappy.

BigPool - Bulk storage. Mirrors for improved IOPS and ease of expansion, as I also use this for bulk but slower iSCSI and NFS storage for ESXi - but mostly bulk storage. I also use a special vdev to accelerate metadata access and small file access in one specific dataset.

SSDPool - Mirrored SSDs. Main iSCSI storage for ESX - I don't have the luxury of NVMe SSDs, so this is the best / fastest I can do.

ScratchSSD - Single SSD. Used as unimportant storage: transcode folders, that sort of thing.

I have 2 Optanes. I use one as a SLOG on SSDPool and one on HDDPool. All of SSDPool is sync writes, but only certain datasets on HDDPool. This is a home system so I don't need to mirror the SLOG. If the SLOG fails, it just slows things down (unless the failure happens during an unexpected / unplanned panic or power outage, in which case I may lose data). I do have a UPS.

I do have an L2ARC on the HDDPool - mostly playing around. With 256GB RAM (128GB (ish) in Scale speak) I don't really need an L2ARC. I just had the drive lying around unused. L2ARCs do not get hit that hard and I wouldn't have an issue using a reasonably good consumer grade SSD as L2ARC.

> @dgrab how much memory do you have? Do you have 64 G or more and still experience ARC misses? Only then will you possibly benefit from L2ARC.
>
> I use dedicated SSD pools for jails and VMs and spinning disk pools for SMB filesharing. Also special vdevs for metadata profit from using SSDs.

My server has only 32GB of ECC RAM, and because I'm currently virtualizing TrueNAS, this of course means I'm assigning even less (8GB as of right now with 3x3TB drives). I also do not have a 10GbE network setup. I'm still very new to TrueNAS and ZFS and just trying to figure out exactly what works for the hardware I have.

I like the idea of VM-storing datasets with snapshots so I'll probably get around to setting up an SSD pool for that.
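The "still experience ARC misses" question can be checked from the ARC counters. Below is a rough sketch that computes the hit ratio from text in the `arcstats` kstat format, which Linux-based OpenZFS systems expose at `/proc/spl/kstat/zfs/arcstats`. The sample text and its numbers are invented for illustration, and the helper name is hypothetical.

```python
def arc_hit_ratio(arcstats_text: str) -> float:
    """Return hits / (hits + misses) parsed from arcstats-style text."""
    counters = {}
    for line in arcstats_text.splitlines():
        parts = line.split()
        # Data rows have three columns: name, type, value.
        if len(parts) == 3 and parts[2].isdigit():
            counters[parts[0]] = int(parts[2])
    hits = counters["hits"]
    misses = counters["misses"]
    total = hits + misses
    return hits / total if total else 0.0


# Made-up sample in the same layout as the real file.
SAMPLE = """\
13 1 0x01 123 33456 1234567890 9876543210
name                            type data
hits                            4    950000
misses                          4    50000
size                            4    6442450944
"""

print(f"ARC hit ratio: {arc_hit_ratio(SAMPLE):.1%}")
```

On a live system the text would come from reading that file instead of `SAMPLE`. A consistently high ratio suggests an L2ARC would add little.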

- Joined

- Dec 11, 2015

- Messages

- 1,410

Hello,

Along with the points and ideas already made, I've a couple to add.

The scenario: there is a raidzX pool of HDDs, and a couple of SSDs lying around to play with.

The main principles for deciding which data goes to which pool: 1) performance requirement, 2) redundancy requirement.

Let's play a little.

¤ Use SSDs for a second pool.

For example, run mirrors for improved block storage.

Try in Z1 to see if you experience any differences?

Run a single drive for downloads/temporary files that does not require redundancy. This has the benefit of not "clogging"/ encouraging fragmentation of your main pool.

Run preferably mirrors, to host VM's.

A "feature" I recently discovered with replications is that TN presents you with different benefits/challenges when there is more than one pool in the box. Thus, there is learning to be had from a multiple pool box.

Play with a mirror + adding a hot spare. Induce errors (using dd, for example), or unplug a drive. See what happens and what state your box ends up in. Try to restore a clean state.

¤ Play with "add-ons".

This includes L2ARC, LOG and special vdev.

Note that few SSDs are suitable for LOG (search and you'll discover).

Learn how to partition/split drives and add them to multiple pools - useful for an expensive LOG.

Play with how to create a degraded pool out of the gate (resources available).

¤ Commit a special vdev to your main pool.

Special vdevs are super powerful but will not be "removable" from your main pool, so beware of that.

¤ Set up the SSDs on another machine, Ubuntu for instance.

Set up ZFS there, and do a replication of a dataset.

Try encryption. What goes on then?
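As a starting point for the "induce errors and see what happens" experiments above, here is a small sketch that only builds and prints a command sequence for a throwaway pool on file-backed vdevs. It assumes a scratch Linux machine with OpenZFS installed. The directory, pool name and sizes are made up, and nothing is executed by the script itself.

```python
from pathlib import PurePosixPath


def lab_commands(workdir: str = "/tmp/zfs-lab", pool: str = "labpool") -> list[str]:
    """Return shell commands for a disposable mirror + hot spare experiment."""
    d = PurePosixPath(workdir)
    disks = [d / f"disk{i}.img" for i in range(3)]
    cmds = [f"mkdir -p {d}"]
    # Sparse files stand in for real drives.
    cmds += [f"truncate -s 1G {disk}" for disk in disks]
    cmds += [
        f"zpool create {pool} mirror {disks[0]} {disks[1]}",
        f"zpool add {pool} spare {disks[2]}",
        # Put some data in the pool so a scrub has blocks to verify.
        f"dd if=/dev/urandom of=/{pool}/testfile bs=1M count=256",
        # Overwrite part of one mirror member without truncating it.
        f"dd if=/dev/urandom of={disks[0]} bs=1M count=64 seek=128 conv=notrunc",
        f"zpool scrub {pool}",
        f"zpool status -v {pool}",
        f"zpool clear {pool}",
        f"zpool destroy {pool}",
    ]
    return cmds


for cmd in lab_commands():
    print(cmd)
```

Whether the overwritten region hits allocated blocks is not guaranteed, so the scrub may or may not report checksum errors on a given run. Keeping this on a scratch machine rather than the TrueNAS box itself avoids touching real pools.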

There are so many avenues to explore.

The documentation is your source of possibilities, the resource section plentiful of ways to implement.

Cheers,

Alright I have some new questions, primarily in regards to hardware choice.

From what I understand, Optane drives are heavily recommended for ZIL. The TrueNAS documentation says "The iXsystems current recommendation is a 16 GB SLOG device over-provisioned from larger SSDs" - my question is, how large? If I were to create a raidz1 pool out of 4x12TB drives or mirrored vdevs, should I be looking for a certain sized Optane? I could get an Optane P1600X for a fairly reasonable price of $128 AUD, but it only has 118GB capacity.

If I didn't buy an Optane SSD or another suitable type of SSD, is using a consumer SATA or NVMe SSD for ZIL completely out of the question? I've read that the results are "terrible", but is it worse than literally writing directly to an HDD data pool?

I also have questions about sizing for the special vdev. I think that would be the most useful one for an entry-level pleb like myself, but I have found limited documentation regarding hardware recommendations. Will any old consumer SSD be sufficient for a special vdev? Or is it a much better idea to buy one of those Intel high-endurance server SSDs? Do special vdevs get heavy writes? I read that it's good to aim for 0.3% of the storage pool size, but I'm guessing it wouldn't hurt to overprovision that, especially if I opt into storing small blocks?

I have 4 more SATA cables to work with out of my SAS-HBA Card; I was thinking two for a special mirror, one for a separate single-SSD pool for downloads/jails and one leftover for a ZIL drive if I ever add one... except they don't connect over SATA, so I would probably just upgrade my motherboard if I went down that route (current motherboard has a grim M.2 situation).
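The 0.3% rule of thumb quoted above is easy to turn into a number for a 4x12TB pool. This is a back-of-the-envelope sketch only: the 0.3% figure is the one from the post, while the function name and the optional small-block allowance are assumptions added for illustration.

```python
def special_vdev_estimate_gb(
    pool_usable_tb: float,
    metadata_fraction: float = 0.003,  # the 0.3% rule of thumb from the post
    small_blocks_gb: float = 0.0,      # hypothetical extra allowance for small blocks
) -> float:
    """Rough special vdev size in GB for a pool of the given usable capacity."""
    return pool_usable_tb * 1000 * metadata_fraction + small_blocks_gb


# 4x12TB as raidz1 (about 36 TB raw usable) and as striped mirrors (about 24 TB).
for label, usable in (("raidz1", 36.0), ("striped mirrors", 24.0)):
    print(f"{label}: ~{special_vdev_estimate_gb(usable):.0f} GB for metadata alone")
```

By this estimate even a pair of small SSDs covers metadata for a full pool, and the rest of a 1TB drive would be headroom for small blocks.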

- Joined

- Dec 11, 2015

- Messages

- 1,410

> how large?

Does not matter. The point is, there is no need for a size greater than 16GB.

> should I be looking for a certain sized Optane?

Aim for 16GB of usable space. Anything larger typically doesn't matter, except in really specific cases. Once it's set up, you can track how little it actually does via some scripts floating around.

> completely out of the question?

Yes.

> but is it worse than literally writing directly to an HDD data pool?

Yes, actually your performance will be worse with the same amount of protection.

It only matters for sync writes. Any other circumstance, it won't even be used. It is only there as a safety net. Like your UPS. If you don't have a UPS setup, you'd probably investigate that part before LOG.
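One way to see why 16GB is plenty: a SLOG only has to hold sync writes until the transaction groups containing them are committed to the pool. The sketch below bounds that by what the network can deliver in a few transaction group intervals. The 5 second interval matches the OpenZFS default for `zfs_txg_timeout`; the assumption of three groups in flight and the function name are illustrative, not taken from the thread.

```python
def slog_upper_bound_gb(
    link_gbit: float,
    txg_seconds: float = 5.0,  # OpenZFS default zfs_txg_timeout
    txgs_in_flight: int = 3,   # assumption: open, quiescing and syncing groups
) -> float:
    """Upper bound on SLOG space in GB if the link is saturated with sync writes."""
    bytes_per_second = link_gbit * 1e9 / 8
    return bytes_per_second * txg_seconds * txgs_in_flight / 1e9


print(f"1 GbE: ~{slog_upper_bound_gb(1.0):.2f} GB")
```

With a 1 GbE network, as in this thread, the bound is under 2 GB, so the spare capacity on a 118GB Optane would mostly sit idle.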

> I could get an Optane P1600X

Don't recall if this particular model has Power-Loss-Protection, which is the key feature.

The "perk" with Optane is their low latency.

> Will any old consumer SSD be sufficient for a special vdev?

Yes.

Provide the special vdev with the desired redundancy. As in, at least mirrors.

As I care particularly about mine and happened to have SSDs lying around, I went for a 3-way mirror.

> Or is it a much better idea to buy one of those Intel high-endurance server SSDs?

No.

> Do special vdevs get heavy writes?

No, not in the general use case. However I think this might differ when playing with dedup tables - I'm not sure.

That's not territory for your use case, other than strict playing. It is massively complex, full of trade-offs, and requires careful system design.

> I read that it's good to aim for 0.3% of the storage pool size, but I'm guessing it wouldn't hurt to overprovision that, especially if I opt into storing small blocks?

I use mine for small blocks. The beauty of sizing is that it directly relates to your mix of files and the settings on each dataset.

Nothing should go bork if it overfills; it will simply not accept more files/metadata, and they'll be handled as if there was no special vdev.

My special vdev sees about 30% use at this moment. Bumping the small block size and record size, plus rewriting the dataset, is required for changes. I'm quite happy the way it is. Massive improvements over none.

> ZIL drive if I ever add one... except they don't connect over SATA

Back in the day the go-to LOG devices were SATA.

@Dice Thank you for the comprehensive answer.

With the special vdev SSDs I think I'd still rather use something decent for performance rather than the cheapest DRAMless rubbish WD Green or Kingston A400.

I was originally thinking 2x MX500 because they're such good value but I've heard Crucial have done some dodgy things with this model over the years like mixing components without changing the SKUs. I use one for cache in my hypervisor and it keeps returning SMART errors too, specifically for Current_Pending_Sector (which I read was a known bug).

Would it be worth considering used SSDs? Specifically a couple of used Samsung 860 Pros? They are MLC drives and I can get a pretty good deal on eBay for a couple. Obviously I'd be checking with the seller first that they're in good health. If not, I might just go with 2x Kingston KC600 unless someone suggests otherwise.

- Joined

- Dec 11, 2015

- Messages

- 1,410

> With the special vdev SSDs I think I'd still rather use something decent for performance rather than the cheapest DRAMless rubbish WD Green or Kingston A400.

Will not make a notable difference. Any SSDs will be fast enough to make an improvement over not having a special vdev, for an HDD pool (granted the data composition can take advantage of them).

> Would it be worth considering used SSDs?

Yes. Provide enough redundancy. The sketchier the units, the more redundancy you add.

I've a clump of Samsung 850 EVOs which have been in and around since new, that is potentially all the way back to 2014 when the model was released.

I don't trust them particularly much, as SSDs tend to die waaay more suddenly than HDDs.

Therefore I use 3x.

> Obviously I'd be checking with the seller first that they're in good health.

Good luck with getting the true story :D

My favorite event on that topic was a seller claiming all drives were at 100% health, as checked with something like HD Sentinel (can't remember, and I don't use Windows), providing a series of screenshots. Sure, one of them claimed 100% health. Another one showed a RAID card, and another one showed how there was <NO SMART DATA> at all reported through the controller.

IMO the most reasonable way is to get a few small, cheap SSDs and provide more redundancy. After all, ZFS's main strength is turning cheap hardware into reliable storage solutions.
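The "more redundancy for sketchier units" argument can be illustrated with simple probability. The sketch assumes each drive fails independently with the same probability during some window (for example, the time needed to notice a failure and resilver a replacement). The 5% figure is invented, and used drives from the same batch may well fail in a correlated way, which would make the real odds worse.

```python
def mirror_loss_probability(p_drive_failure: float, width: int) -> float:
    """Probability that every member of an n-way mirror fails in the same window.

    Assumes independent failures, which is optimistic for same-batch drives.
    """
    if not 0.0 <= p_drive_failure <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if width < 1:
        raise ValueError("mirror width must be at least 1")
    return p_drive_failure ** width


p = 0.05  # made-up per-drive failure probability for the window
for width in (1, 2, 3):
    print(f"{width}-way: {mirror_loss_probability(p, width):.6f}")
```

Each extra mirror member multiplies the loss probability by `p` again, which is why a third cheap drive can buy more safety than one premium drive.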

- Joined

- Dec 11, 2015

- Messages

- 1,410

I believe you will find value in this thread:

www.truenas.com

Rewrite pool in situ?

Hello, I'm planning to add two special vdevs to my main pool. To my understanding, in order to make use of them, I'd have to 'rewrite in situ' my pools data to populate the special vdevs. What would be the most efficient way to do this? A large chunk of my data is "too large to be doubled...

> Will not make a notable difference. Any SSDs will be fast enough to make an improvement over not having a special vdev, for an HDD pool (granted the data composition can take advantage of them).

Fast enough for an improvement over no special vdev? I mean sure. DRAM would still provide just that extra bit of performance optimization and reliability though. DRAM SSDs with good controllers are more than affordable now, and have much better endurance than the cheap stuff.

I'm not that keen on using x3 SSDs for a special vdev. I just don't have that many SATA connectors available, and I have zero interest in upgrading my motherboard to add another HBA card. SSDs have been pretty good for me over the years so I'm sure x2 will be more than sufficient, particularly if I choose my hardware wisely.

- Joined

- Jan 11, 2021

- Messages

- 415

> Will not make a notable difference. Any SSDs will be fast enough to make an improvement over not having a special vdev, for an HDD pool (granted the data composition can take advantage of them).
>
> […]

Performance-wise ... true. But I would choose something with "real" PLP and a decent controller.

I'd stay away from SSDs with internal "accelerating" DRAM caches, SLC-driven "performance areas" and dubious controller "background optimization". If it gets interrupted ...

You can't compensate for that risk with any SLOG or UPS. If atomic writes are forcefully aborted, your metadata (and in the case of a special vdev, your pool) will be toast. ZFS won't "know", since all that crap happens in the drive(s).

If it is business critical, I'd buy enterprise/datacenter drives (with PLP) any time.

> Performance-wise ... true. But I would choose something with "real" PLP and a decent controller.

Interesting, so just running a special vdev at all entails a risk? A risk that wouldn't exist if you just keep all your zpool's metadata to the old data vdevs?

At least in my case, I'm a relatively casual home user. While I'm storing stuff I care about, I wouldn't say there's any "mission critical" data requiring constant uptime, and obviously I store backups. This is also why I'm quite comfortable virtualizing TrueNAS from my hypervisor, even though such practice is frowned upon by the ZFS purists. I also live in an area with no natural disasters and no potential hazards interacting with the server.

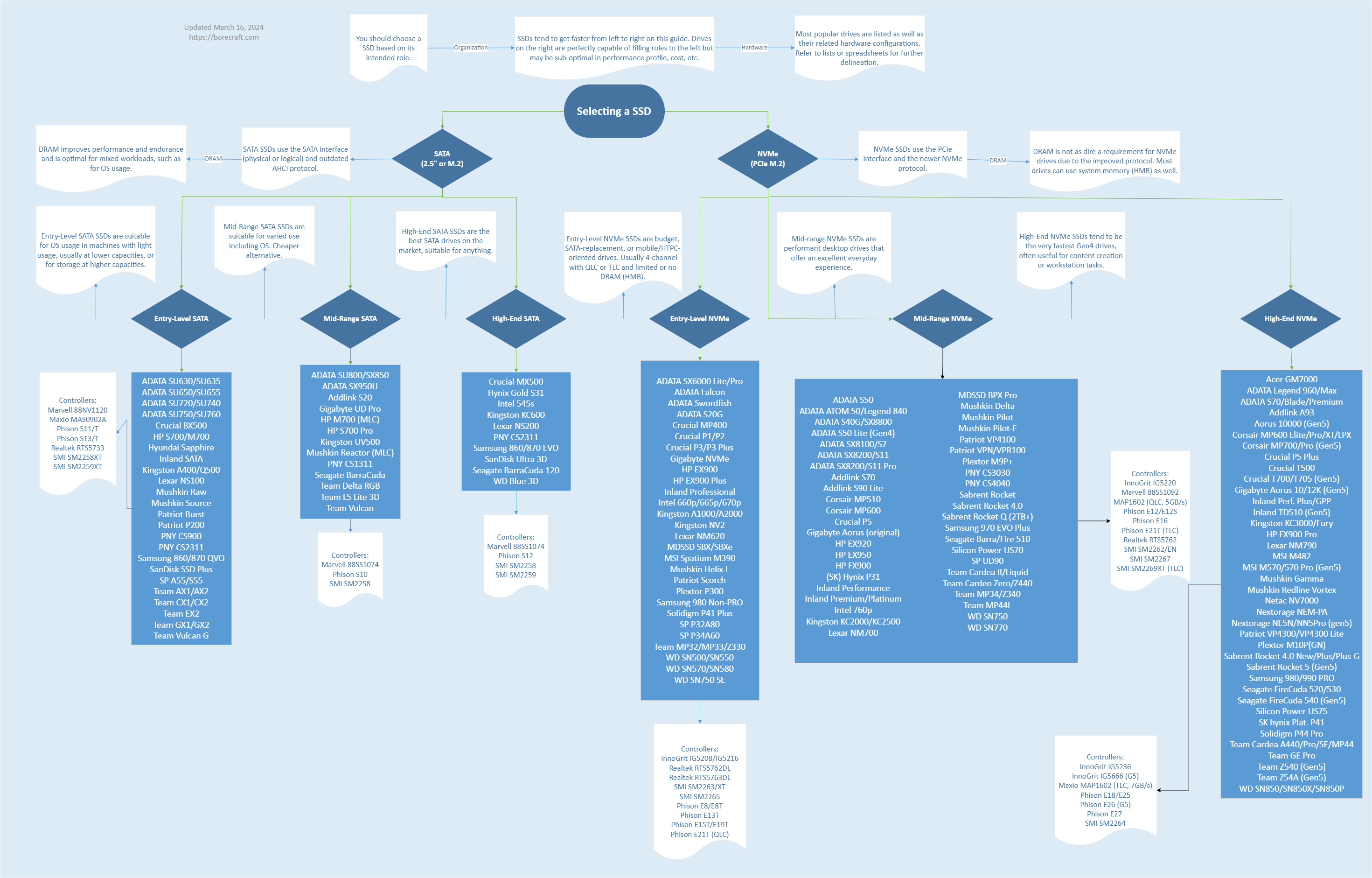

Out of curiosity, do you have an opinion on the borecraft SSD chart/buying guide?

SSDs

I don't know much about SSD controllers, but a lot of PC builders use this as a reference for what SSD to buy for their desktop builds. I don't know if it's a good guide for servers.

- Joined

- Dec 11, 2015

- Messages

- 1,410

> You can't compensate that risk with any SLOG or UPS.

The first and foremost protection from power loss is a UPS, period.

> If atomic writes are forcefully aborted, your metadata (and in the case of special vdev your pool) will be toast.

Correct. But this remains true for a pool without a special vdev.

> If it is business critical, I'd buy enterprise/datacenter drives (with PLP) any time.

Correct, but to avoid seeding unnecessary doubts and confusing OP or the argument too much -

Your main point is essentially to avoid using crap drives, because crap is worse than premium drives. To make it clear, this argument is difficult to argue against, but it really is not a dependency in the case of special vdevs.

> Interesting, so just running a special vdev at all entails a risk? A risk that wouldn't exist if you just keep all your zpool's metadata to the old data vdevs?

I wouldn't say so.

Losing or corrupting metadata writes on your HDD vdevs is just as catastrophic as losing them on a special vdev of SSDs.

One may of course argue that any added complexity adds points of failure.

I'd say the main reason special vdevs are not as popular around the forums is that there is not much use for them for most people. Add a bit more complexity and additional hardware in typically already <device clogged servers> where drive slots need to be economized, and it oftentimes makes little sense. In some data configurations, even less sense. It all depends on the circumstances.

- Joined

- Jan 11, 2021

- Messages

- 415

> Interesting, so just running a special vdev at all entails a risk? A risk that wouldn't exist if you just keep all your zpool's metadata to the old data vdevs?

A special vdev can hold metadata, dedup data and small I/O. With the small I/O "caching" feature you can even control via recsize whether a whole filesystem is forced onto a special vdev. (Beware, it should have the same ashift as the pool!)
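The recsize remark can be made concrete with a simplified model of the placement rule: metadata and dedup data go to the special vdev, and a data block goes there when its size is at or below the dataset's `special_small_blocks` value. The sketch ignores compression and the case where the special vdev is full, and the helper names are made up for illustration.

```python
KIB = 1024


def data_block_size(file_size: int, recordsize: int) -> int:
    """Simplified model: small files use one block about the size of the file;
    larger files are split into recordsize blocks."""
    return min(file_size, recordsize)


def lands_on_special(kind: str, block_size: int, special_small_blocks: int) -> bool:
    """Decide placement for one block under the simplified rule."""
    if kind in ("metadata", "dedup"):
        return True
    # A threshold of 0 means small data blocks are not redirected at all.
    return special_small_blocks > 0 and block_size <= special_small_blocks


# recordsize 128K, threshold 32K: only small files are redirected.
print(lands_on_special("data", data_block_size(16 * KIB, 128 * KIB), 32 * KIB))
print(lands_on_special("data", data_block_size(1024 * KIB, 128 * KIB), 32 * KIB))
# recordsize 32K, threshold 32K: every data block qualifies, so the whole
# dataset ends up on the special vdev.
print(lands_on_special("data", data_block_size(1024 * KIB, 32 * KIB), 32 * KIB))
```

The third case is the "forced onto a special vdev" situation: once recordsize is no larger than the threshold, no data block is big enough to stay on the HDDs.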

So ... in a "regular" use case (without a special vdev) metadata is (a) distributed (over multiple disks, relative to the redundancy level) to a reserved area on the pool in (b) multiple copies (again relative to the redundancy level). How much redundancy does a single "special vdev" built from a single drive have? I'd not go below a triple mirror. (Doing backups is mandatory anyway.)

> At least in my case, I'm a relatively casual home user. While I'm storing stuff I care about, I wouldn't say there's any "mission critical" data requiring constant uptime, and obviously I store backups. This is also why I'm quite comfortable virtualizing TrueNAS from my hypervisor, even though such practice is frowned upon by the zfs purists. I also live in an area with no natural disasters and no potential hazards interacting with the server.
>
> [...]

Well. Yeah. But it's just hardware. Imagine it's crashing from a dying PSU or a kernel crash or ... (fill in anything you could think of). If you don't care, fine. Do whatever you want. I would have been glad if somebody had told me to be careful when I did my first ReiserFS-based file server.

- Joined

- Jan 11, 2021

- Messages

- 415

> The first and foremost protection from power loss is a UPS, period.
>
> [...]

External power loss. If your PSU dies, it's still power loss. "Nice UPS you got there."
