Thanks for the reply. I tried to address most of this in my second post, although I realize it's a longer read.

Like most things, it depends on your workload. If you will be using the hypervisor functions of TrueNAS, you probably want more cores.

I'm planning on using Proxmox as the hypervisor, with TrueNAS Core as a VM and hardware passthrough for the HBAs.

If you are using it just for storage (like I am), you probably want higher clock speeds and hyperthreading off. I think Samba is still single threaded, so that is someplace where the higher clock speed will make a difference.

SMB is single threaded. I know people say higher clock speeds for higher speed, but no numbers or guidelines are ever given (that I have found anyways). I was hoping someone could break that down and define it more. How much is needed?

I am curious about the relationship between clock speed, single-threaded performance, and SMB. While clock speeds have mostly stagnated, single-threaded performance continues to improve at essentially the same clock speeds. I wonder how much this comes into play.

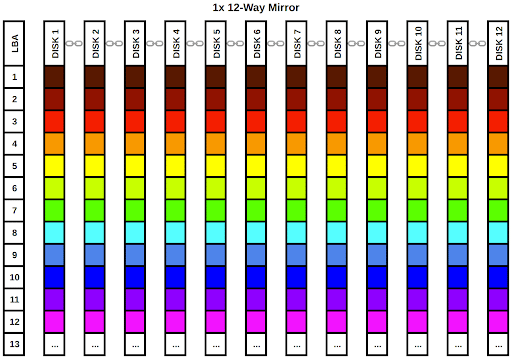

As far as saturating a 10G or 25G link, that will most likely depend more on pool construction than CPU. I find my bare metal storage only units are never CPU bound. I switched my pools from RAID-Z2 to mirrors, and that definitely helped.

That's helpful. Would this be on the primary NAS in your signature, with the dual E5-2637 v4 @ 3.50GHz? I suppose there is no easy way to see how much CPU utilization there is per core during transfers (just the aggregate for all cores).

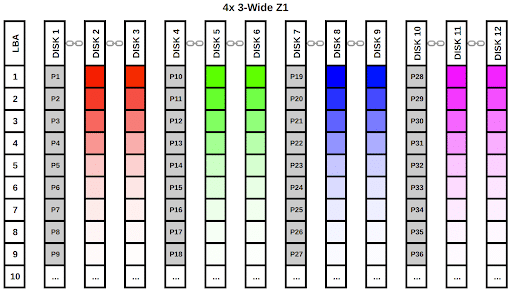

In my case, I am only really concerned about SMB speed with the SSD pool. Under the current build sheet it will consist of 10 Crucial MX500 3.84TB drives, in 2x 5-wide RAID-Z1 vdevs. That gives theoretical sequential read/write speeds of about 4400MB/s, so it should be able to saturate 25G.
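For anyone who wants to check the arithmetic behind that 4400MB/s figure, here is a rough sketch. It assumes about 550MB/s of sequential throughput per SATA SSD (my assumption, not a measured number) and counts only the data drives in each RAID-Z1 vdev. Real SMB transfers will land below both numbers because of protocol and ZFS overhead.

```python
# Back-of-the-envelope sequential throughput for 2x 5-wide RAID-Z1
# compared against raw 10GbE / 25GbE line rates.
# ASSUMPTION: ~550 MB/s per SATA SSD (not measured; it is the per-drive
# figure implied by the ~4400 MB/s estimate above).

PER_DRIVE_MBPS = 550    # assumed sequential MB/s per drive
VDEVS = 2               # number of RAID-Z1 vdevs in the pool
DRIVES_PER_VDEV = 5     # width of each vdev
PARITY_PER_VDEV = 1     # RAID-Z1 has one parity drive's worth per vdev


def pool_sequential_mbps(vdevs, width, parity, per_drive):
    """Idealized streaming throughput: only data drives contribute."""
    return vdevs * (width - parity) * per_drive


def link_mbps(gbit_per_s):
    """Raw line rate in decimal MB/s, before any protocol overhead."""
    return gbit_per_s * 1000 / 8


pool = pool_sequential_mbps(VDEVS, DRIVES_PER_VDEV, PARITY_PER_VDEV, PER_DRIVE_MBPS)
print(f"pool  ~{pool} MB/s")
print(f"10GbE ~{link_mbps(10):.0f} MB/s")
print(f"25GbE ~{link_mbps(25):.0f} MB/s")
```

On paper the pool has headroom over a 25G link, but this is a ceiling, not a prediction.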

Although, as shared, 25G really isn't needed. The only benefit is for large file transfers. This is more of an exercise in seeing what's possible and having fun.

As a general rule, RAID-Z1 is not recommended for larger drives since resilvering would take a long time and you would lose the entire pool if there was another drive failure during that process.

The Z1 is only for the SSD pool. My understanding is that this is still considered a reasonable practice with SSDs, no? The non-recoverable error (NRE) rate is specified as 1 in 10^17 bits read, although I do realize single parity can be a problem when an NRE does occur during a rebuild.
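To put a rough number on that rebuild risk, here is a small sketch. It assumes the spec means one unrecoverable error per 10^17 bits read, that errors are independent, and that a resilver reads all four surviving drives in a 5-wide vdev in full. Those are my simplifications. A real resilver only reads allocated data, so this is closer to an upper bound for a full vdev.

```python
import math

# ASSUMPTIONS (mine, for illustration only):
#   - the spec'd rate is per bit read
#   - errors are independent
#   - every surviving drive in the vdev is read end to end
NRE_PER_BIT = 1e-17      # 1 unrecoverable error per 10^17 bits read
DRIVE_BYTES = 3.84e12    # 3.84 TB per drive
SURVIVING_DRIVES = 4     # 5-wide RAID-Z1 with one drive failed


def p_nre_during_resilver(drives, drive_bytes, rate_per_bit):
    """Probability of at least one unrecoverable read error in a full read."""
    bits_read = drives * drive_bytes * 8
    # 1 - (1 - rate)^bits, written with log1p/expm1 so tiny rates stay accurate
    return -math.expm1(bits_read * math.log1p(-rate_per_bit))


p = p_nre_during_resilver(SURVIVING_DRIVES, DRIVE_BYTES, NRE_PER_BIT)
print(f"chance of an NRE during resilver at 1e-17: {p:.3%}")

# For comparison, the same read at a hypothetical 1e-15 rate:
p_1e15 = p_nre_during_resilver(SURVIVING_DRIVES, DRIVE_BYTES, 1e-15)
print(f"chance of an NRE during resilver at 1e-15: {p_1e15:.1%}")
```

The point of the comparison is just how sensitive the result is to the exponent in the spec sheet.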

In my case, both single-parity flash pools (NVMe mirrors and SSD Z1) will be backed up to the HDD pool (2x parity), plus off site. Given the low NRE rate and general reliability of flash, in conjunction with multiple backups, I feel safe (enough) with single parity for flash-only pools.

If all the drives in a pool are NVMe, the SLOG might not help as much. An SLOG only helps if sync is on.

Sync would be on for the VM pool.

As for the benefits of a SLOG where the underlying pool is already flash-based media, I remember reading a thread here where this question was specifically asked (I wish I could still find it).

In the thread, an experienced mod (it may have been jgreco) mentioned that a SLOG can still be beneficial in situations like this. The reason given had to do with the write paths. Without a SLOG, ZIL writes still have to go through the pool's write stack, which takes additional time since it isn't optimized for pure speed, whereas the SLOG write path is optimized for speed. So even when NVMe is being used, a dedicated SLOG can still be faster, provided it isn't a bottleneck in and of itself.

EDIT: I found the thread:

Hi friends, I'm new in the community and with TrueNas too. At the moment I want to build a storage server and I had been reading some blog posts of other friends telling their experience and so on, but I would like to clarify something that may be very simple. I have in mind to create a ZFS...

www.truenas.com